*In this context "humanism" refers specifically to secular humanism and excludes religious humanism. I'm just following the way that secular humanists most often describe themselves.

**tongue in cheek

There are two common objections to identifying humanism with atheism+. First, humanists lean towards diplomacy, while many people in atheism+ wish to be more confrontational. Second, humanists seem concerned with the project of finding secular ways to fulfill the needs usually fulfilled by religion. Personally, I think both of these objections are based on mischaracterizations of humanist values. I think people's impressions are distorted by the Harvard Humanist Chaplaincy, which gets a lot of press but isn't that big. It would be better to refer to the Council for Secular Humanism (CSH) or the American Humanist Association (AHA), which do not seem to express such values.

I'm partial to a third objection. As Greta said:

I would like to point out that humanism is hardly immune to the problems we’ve been talking about here — the problems that Atheism Plus is working to address.

Many humanist groups have a huge diversity problem.

Just because humanists claim to address social justice issues doesn't mean they do it well. Most anti-feminists fancy themselves to be in favor of gender equality, but at the same time fight against it. In their view, we've nearly achieved gender equality already, and feminists are just trying to tip the scales against men. I am not saying that humanists are anti-feminist; I'm saying that when a group purports to be in favor of social justice, we can't take their word for it. We have to scrutinize them. This applies to humanists, and it applies to atheism+ too!

To give away my biases, I actively dislike humanism. It's not so much that I disagree with the principles; it's that I think they are too vague, and I disagree with the attitude that leads to such vague principles. From the CSH:

Secular humanists believe human values should express a commitment to improve human welfare in this world. (Of course, human welfare is understood in the context of our interdependence upon the environment and other living things.) Ethical principles should be evaluated by their consequences for people, not by how well they conform to preconceived ideas of right and wrong.

...

Indeed, say secular humanists, the basic components of effective morality are universally recognized. Paul Kurtz has written of the “common moral decencies”—qualities including integrity, trustworthiness, benevolence, and fairness.

Improving human welfare? Consequentialist metaethics? Benevolence? It's a frustrating mixture of high-minded philosophy and undefined feel-good values. Note that there's no mention of feminism, ethnic minorities, or any social justice causes at all. They're in favor of improving human welfare, but how do I know whether they think that includes feminism?

Some of these concerns are put to rest by Ron Lindsay (the head of CFI, CSH's parent organization). He explicitly mentions several specific causes that CFI fights for.

CFI has long been active in supporting LGBT equality, in supporting reproductive rights, in supporting equality for women, in opposing suppression of women and minorities, not just in the US but in other countries, in supporting public schools, in advocating for patient’s rights, including the right to assistance in dying, in fighting restrictions on the teaching of evolution, in opposing religious interference with health care policy, in promoting the use of science in shaping public policy, in safeguarding our rights to free speech, and in protecting the rights of the nonreligious.

Ron Lindsay says these issues are constrained to those where religion or pseudoscience have a big impact, and constrained to what they can do with limited resources. Good for CFI. Maybe I am wrong about the humanist community.

If we want to explore common humanist attitudes towards social justice a little further, we can check out humanist publications (which can express views unconstrained by organizational resources).

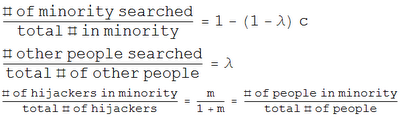

I propose an experiment. I will search the CSH website and The Humanist (a magazine put out by AHA) for the word "ethnicity" (or similar), and sample the results at random. For comparison, I'll try searching the AtheismPlus forum as well, though it may be too young to find anything. I'm looking for a particular attitude that I think separates a good social justice advocate from someone who just wants to be a social justice advocate. Namely, I am looking for colorblind ideology, the insistence that you don't see race. Colorblindness is a way to ignore racial disparities while excusing yourself from any responsibility to fix them.

If the humanist websites mostly express colorblindness, I consider that a strike against them. If they don't express colorblindness, or explicitly reject it, I would be impressed.

Here I pause. Does my procedure sound any good? What's your prediction of the results?